McEs, A Hacker Life

I HATE prelink

While making last-minute clean ups for

preload to handle deleted binaries and shared objects nicely, I figure out a disaster, which is

prelink changing binaries

every #^@&ing night :-(.

What happens then is that preload simply loses all the context information it has gathered about the binary, since it's a new binary there in place...

So I have to ignore the part of the file name after the magic string ".#prelink#.". What a hack...

Google Talk

Like everybody else is putting up their

Google Talk id, mine is behdad.esfahbod.

FFtAM with your common sense :-).

Last days

The deadline for the

Google Summer of Code is approaching rather fast, and I find myself hacking in the office for 48 hours, sleeping for 12, repeat. The code is slowly getting into CVS, check

preload.

Lots of good news from the

Gtk+ team, and lots of releases too. It's good to see that all the "

Gtk+-2.8 is slow/immature" hype vapored away and we are offered a rock-slid toolkit once again. And of course there's

Project Ridley too. Thanks

Gtk+ team, thanks Matthias Clasen, Thanks

Owen Taylor, Thanks

Keith Packard and Thank

Carl Worth.

On another note, weirdly enough, in the past 48 hours I've received offers for interview from Microsoft

and Amazon. As I get more near to finishing my Masters, I'm starting to worry about ending up in Seattle, which is unfortunate given my interest in Linux and Open Source, but there's not much more I can do about that. I prefer the Boston conspiracy.

Here is my résumé in case you are interested.

It's all Love and Nothing More

Less than five hours after my

call for Love(!) (for

this bug) somebody sends them all over to me via email (

GNOME has been experiencing hardware problems). I go to sleep after a day or two and wake to find out somebody else has uploaded another full set of icons to the bug! Golden Hearts go to Nigel Tao and Francesco Sepic for their dedication, a Bronze Heart to Chris Scobell, and Honorable Mention for Roger Svensson. (Now we are waiting for a good sould to hack up a patch :).)

jdub: Yeah, and Fedora is the

♥iest of Linux distros, so a red one. :P

(Such a gucharmap'ic post)

Pigeons

What do you call a

p.g.o member? A 'p.g.o'n:

$ grep ^'p.g.o'n$ /usr/share/dict/words

pigeon

(see also

PigeonRank)

GNOME Love Wanted

Here is a pretty educational way to start contributing to GNOME as well as getting a tour of Unicode, all needed is a recent GNOME with Evince installed and some free time:

Bug 313496: Add iconic script indicators to gucharmapYes!

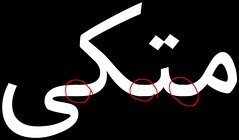

Arabic Joining Rendering Problem

I'm been thinking about this weird problem that

FarsiWeb fonts look bad at small sizes. The effect was like some glyphs being placed one pixel above where they should be and so an unwanted shift when they

join to the neighboring glyphs. I discovered what's going on when accidentally rendered a sample text in huge font with inverse colors. A reworked sample is the image exhibited in this post. See it at full size

here. If you don't see the problem, try enlarging the photo.

The problem is two-fold, and by far one of the hardest problems I have faced so far in Persian computing. In short: A) The glyphs in the font have tails spanning out of the glyph extents. B) When rendered with anti-aliasing on, the overlapped section becomes darker, due to being rendered twice.

I need to elaborate on why glyphs have those long tails in the first place. Since anti-aliased rendering is a relatively new phenomenon and many older renderers have had rounding bugs, etc, Arabic font designer have mostly opted for have glyphs that overlap, to make sure no white gap shows up at the joint. I have witnessed such a problem myself with DVI/PS/PDF viewers quite a few times while working on

FarsiTeX. The solution again, have been to mechanically add some extra tail to make sure no gap is rendered. But now with anti-aliased rendering, it's causing problem.

The overlapping glyphs is what Keith Packard calls a broken font. Seems like Xft already takes extra care of joining glyphs, pre-adding the joints such that they don't look weird. So all we need is fonts that are not broken, which is, well, reasonable. Seeking for another fix may fail miserably, since otherwise you need to either 1) use conjoint operators to add the glyphs to a temporary surface and compose it to destination afterward, but keithp says even that doesn't quite work, or 2) merge all the glyph paths and draw them in one operation. This one sucks performance-wise, sine each glyph will be drawn from path every time, instead of the usual fast path of getting FreeType render glyph alpha masks that are cached on the server by Xft...

But there's one more reason to have broken fonts: We use that in our justification algorithm in Persian TeX systems. To implement proper Arabic justification in TeX, there are two ways: 1) use rules (black boxes), this is the easiest, but suffers from the same broken-viewers problem: the rules will be rendered with solid black edges, while neighboring edge from the glyphs is antialiased. 2) use cleaders to repeat a narrow joining glyph. This eats a lot of resources in the final output and rendered, but at least looks better. But since cleaders insert an integer number of those glyphs, we need to somehow fill the remainder, and we do that by having glyphs with joining tails extended out of their box. Is there another way to do justification without broken fonts? Maybe. With a PostScript backend for example, one may be able to write PS hacks to convert an stretchable rule or glue into an scaled joining glyph. Or we may be able to add support for that to pdfTeX. They have recently added a bunch of types, like floating point registers, etc. They are quite open to useful extensions. It may even be solved by

PangoTeX, by the time it happens,

Pango would definitely have support for Arabic justification :-).

There is a partial third solution: Use hinting to make sure the baseline of the font occupies a full number of aligned pixels, such that double-painting doesn't make any difference. But that seems to be harder to achieve than just cutting the tails.

Misc

Back from a week in Hamilton, Ontario spending time hacking with friends. Happened to meet

desrt of

GNOME IRC over coffee too. I've received some very encouraging feedback for my

PangoTeX post, none of them technical though.

An interesting not-so-much-flame war has been happening while I was recovering from accumulated jetlag problem over the past week. Hopefully we will have a much better panel, applets, and notification system at 10. To make Davyd happy, I've got a heck a lot of applets on my panel after the recent installation of FC4, listing process names:

- wcnk (all three of them)

- multiload (with all resources)

- mixer (has problems every other day)

- clock (wish there were more compact date formats)

- battstat

- gweather

- netstatus (1 for eth0, 1 for ath0, argh)

- cpufreq

- keyboard

- geyes

- notification area

so it's not hard to imagine that I run out of panel space. I wish there was a way to hide the text in "Applications Places Desktop" menus that take a good twenty something percent of my top panel space.

Best of

color perception. I'm still amazed by the first one. The second is reasonable to handle, but the third one made me wondering that I would have been amused if it was otherwise! I mean, your brain is filtering out the yellow/blue filter before deciding what the joint is colored

in reality, but what you are comparing is the color after the filter applied! It's like having a photo of car A colored navy blue in a dark night, and a photo of car B colored deep dark blue in the daylight. Not surprisingly your brain has no problem identifying car A to be lighter colored than car B, but do GIMP and the two are the same color, not surprised! It's this processing that makes you not wonder why objects change color in different lighting conditions! Anyway, it's still surprising.

In Bob Dylan news,

No Direction Home set will be released

just in time for my b-day too, so you've got a lot of choice(!), as the set consists of a DVD by Martin Scorsese, the soundtrack, and the book. In case there's no indication of I getting any gift of those DVDs, I'll go on and watch it on PBS right on my birthday :-). Ha ha.

I'm deep busy with the

Google Summer of Code project of mine. A report to come soon. Also decided to attend

Google Code Jam 2005 and the

online IOI 2005! I don't have any chance at

Code Jam, since they don't allow C, only C++, Java, C#, and VB (yes, they require you to write classes with given interface), but I'm curious to see how my abilities have changed after five years: whether I still can do a tricky backtrack, a heuristic, and a graph problem in five hours of contest time with enough accuracy... I guess I've improved in the code quality and speed, but am not familiar with latest heuristic tricks that circulate in the IOI community.

Finally, after having to type the URLs for

pango,

dasher,

cairo,

gnome,

fedora, etc too many times, hacked up a script to automatically link them :-). The little PHP script

linkage uses

a list of patterns and URLs to hyperize my blog posts. No reason to use PHP really, Python could do that as well. There are some things that I still feel better to use PHP for and heavy regexp is one of them. The links file on my laptop grows with every blog post. My RSI-suffering fingers appreciate it a lot. The heavily linked

PangoTeX post was the motivation to code that thing.

Pango+TeX Follow-Up

A few readers asked me to elaborate on why I think a

Pango-enabled TeX is useful, how does it work, and what to anticipate. In this post I'm calling such a combination PangoTeX. To understand why PangoTeX is useful, we need to know what each of them is good at, and what not.

TeX is pretty good at breaking paragraphs into lines and composing paragraphs into pages. What it is not good at is complex text layout, means, non-one-to-one character to glyph mapping and more than one text direction. e-TeX has primitives to set text direction, but not more.

Pango on the other hand, knows nothing about pages and columns. It does break paragraphs too, but not anything to envy. Patches exist that implement the TeX

h&j algorithm for

Pango, but that doesn't matter here. What

Pango is pretty good at is the character to glyph mapping, where it implements the

OpenType specification for quite a bunch of scripts.

Pango contains modules for the following scripts: Arabic, Hebrew, Indic, Khmer, Tibetan, Thai, Syriac, and a Burmese module is recently proposed. Other than that, it has a module to use

Uniscribe on Windows, and there's also a module available on internet (that may be integrated into

Pango soon) to use the

SIL Graphite engine.

So the plan is to make TeX pass streams of characters to

Pango and ask it to shape them. The way

XeTeX is implemented is that TeX passes to the higher-level rendering engine words of text and all it asks for is the width the word would occupy.

XeTeX has backends for Apple

ATSUI and

ICU at this time.

Pango has the advantage of abstracting

OpenType in general,

Uniscribe,

Graphite, and hopefully

ATSUI in the future. So it would be enough to only have a

Pango backend.

That level of integration is pretty much what

XeTeX does, which is quite useful on its own, but doesn't mean it should be the end of it. Much more can be done by using

Pango's language/script detection features, it's bidirectional handling engine, etc, such that you don't have to mark left-to-right and right-to-left runs manually. Moreover, while doing this, we would introduce the

Unicode Character Database to TeX, such that (for example) character category codes would be automatically set for the whole BMP range, and you may query other properties of characters should need be.

The way

Omega approached the problem of

Unicode+TeX was to add a push-down automaton layer that could convert the character stream at as many stages as desired. So you could have an input layer to convert from legacy character sets to

Unicode, and then a complex shaping engine, and finally convert to font encoding. The problem with this approach is that it's very complex, so it introduced a zillion bugs. Of course, bugs can be fixed, but just then comes the next problem: Duplication. The powerful idea behind having shaping information in

OpenType fonts was left unused there. For each font you had to implement the shaping logic (ligatures, etc) in an

Omega Transformation Format file. Moreover, the whole machinery was more like Apple's AAT, rather than

OpenType, which means it doesn't have any support for individual scripts: If you want to do Arabic shaping, you have to code all the joining logic in OTF. Neither did it provide

Unicode character properties. If you need to know whether a character is a non-spacing mark, you have to list all NSMs in an OTF file. If you wanted to normalize the string, well, you had to code normalization in OTF, which is quite possible and interesting to code, but don't ask me about performance... Putting all these together, I believe that

Omega cannot become a unified

Unicode rendering engine without introducing support from outside libraries. When you do import some support from

Pango and gNUicode for example, all in a sudden you do not need all that push-down automata anymore. A charset conversion input layer that uses iconv is desirable though.

About the output layer,

XeTeX generates an extended DVI and converts it to PDF afterwards, using a backend-specific extended DVI driver. We can do that with

Pango+

Cairo too, to write to a PDF or PS backend. Or since

Pango computes glyph-strings when analyzing the text, we may not even need

Pango when converting the DVI to PDF. Anyway, what I'm more interested is to expand pdfTeX directly. We don't really need DVI these days. As I said before, the assumptions that Knuth made have proved to be wrong in the new millennium. It's not like the same DVI would render the same everywhere, no, you need the fonts. That's why fonts used in today's TeX systems is separate from fonts you use to render your desktop to you screen, because they are isolated and packaged separately in a TeX distribution, such that almost everyone has the same set of fonts... This should be changed too, with only having PDF output, and some kpathsea configuration. You still need the fonts to compile the TeX sources, but the output would be portable.

To conclude, I have changed my mind about cleaning up and adding

UCD support to

Omega and believe that we badly need a pdfTeX+

Pango engine to go with our otherwise-rocking

GNOME desktop.

That's all for now. I'm very much interested to get some feedback.

WiFi Speed Spray

As

advertised on eBay.

Pass by Reference in C

When reading

setjmp.h I figured out a nice trick used by glibc hackers to implement pass-by-reference'd data types. It was like Wow! Cool! Beautiful! Automatic memory allocation with no reference operator when passing to functions. This has been indeed part of the design of C and exactly why array types were added to C, yet I never figured out arrays can be used to implement opaque types that are passed by reference.

#include <stdio.h>

typedef struct __dummy_tag {

int x;

} the_type[1];

void

setter(the_type i, int v)

{

i->x = v;

}

int

getter(the_type i)

{

return i->x;

}

int

main(void)

{

the_type v;

setter (v, 10);

printf ("%d\n", getter (v));

setter (v, 20);

printf ("%d\n", getter (v));

return 0;

}

You learn new things everyday...

Update: Apparently

blogger has !@#$ed up my post. Kindly read the code syntax-highlighted and cutely-indented in my blog.

Update2: Originally I had planet blamed instead of blogger in the previous update. Giving up after a few tries to do overstrike on planet, I simply replaced it with blogger.

Back

So I did a little hacking to mirror my

feed such that

blogger does not get upset about

planet fetching too regularly. In the meantime, I was at DDC and OLS and did a lot of hacking on

dasher,

cairo, and

pango. As a result, I'm a cairo programmer (not developer) now. Finally moving on to

preload. GIMPed hackergotchis for

hub and

jg and sent to

jdub too. Man put 'em up.

I just found

XeTeX yesterday. After reading the paper, I believe they are on a right track, as far as comparison to Omega is concerned. Several fundamental assumptions of Knuth's work and era have been proven wrong, and the the arhchivability through code-freeze is no exception. I think the comparison between a self-contained system like TeX/Omega and the XeTeX approach is exactly like C versus Java.

In other words, we can get the idea, plug Pango and Cairo in, and get a decent portable i18n'ized TeX-based typesetting system. No more font and complex-text-layout code duplication... WOW!

Interesting link stolen from

jwz:

WORLDPROCESSOR Catalog.